Why are there 8 bits in a byte?

Table of contents

- Why are there 8 bits in a byte?

- Why is it 8-bit?

- Is binary always 8-bit?

- How many bytes is 8 bits?

- Does 8 bytes equal 1 bit?

- What is 8 bits of data?

- What does 8-bit mean in a microcontroller?

- What is an 8-bit system?

- Can a byte not be 8 bits?

- How do you represent 8 in a byte?

- Why is the number of bits in a byte 8?

- What is the smallest number of bits in a character?

- What was the first computer with an 8-bit character?

- What would happen if we had only 4 bits per character?

Why are there 8 bits in a byte?

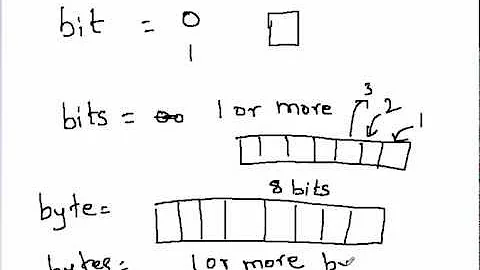

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer and for this reason it is the smallest addressable unit of memory in many computer architectures.

Why is it 8-bit?

The true definition of the descriptor “8-bit” refers to the processors that powered consoles like the NES and Atari 7800 and has nothing to do with the graphics of the games they ran. ... So what does the eight mean in 8-bit? That refers to the size of the unit of data a computer can handle, or its “word size.”

Is binary always 8-bit?

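No. A binary number can have any number of digits; eight is simply a common grouping. In the electronics world, each binary digit is commonly referred to as a bit. A group of eight bits is called a byte, and a group of four bits is called a nibble. Hexadecimal is a good shorthand to know, because one hexadecimal digit stands for exactly one nibble.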

| Decimal (base 10) | Hexadecimal (base 16) |
|---|---|
| 8 | 8 |
| 9 | 9 |
| 10 | A |
| 11 | B |
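As a rough illustration of the table above, the following sketch prints the same four values in decimal, hexadecimal, and 8-bit binary. The helper name `describe` is made up for this example; it only relies on Python's built-in format specifiers.

```python
def describe(n: int) -> str:
    """Return n written in decimal, hexadecimal, and zero-padded 8-bit binary."""
    # "X" formats as uppercase hexadecimal; "08b" formats as binary padded to 8 digits.
    return f"{n} = 0x{n:X} = 0b{n:08b}"


# The four values shown in the table.
for value in (8, 9, 10, 11):
    print(describe(value))
```

Each hexadecimal digit corresponds to the lower four bits (one nibble) of the binary form, which is why hexadecimal works well as shorthand for binary.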

How many bytes is 8 bits?

Eight bits are exactly one byte on modern systems. To convert bytes to bits, multiply by 8.

Bytes to bits conversion table

| Bytes (B) | Bits (b) |
|---|---|
| 6 | 48 |
| 7 | 56 |
| 8 | 64 |
| 9 | 72 |
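The conversion in the table is a single multiplication. A minimal sketch, assuming the modern 8-bit byte (the names `BITS_PER_BYTE`, `bytes_to_bits`, and `bits_to_bytes` are chosen for this example only):

```python
BITS_PER_BYTE = 8  # assumption: the modern 8-bit byte


def bytes_to_bits(n_bytes: int) -> int:
    """Convert a byte count to a bit count."""
    return n_bytes * BITS_PER_BYTE


def bits_to_bytes(n_bits: int) -> float:
    """Convert a bit count to a (possibly fractional) byte count."""
    return n_bits / BITS_PER_BYTE


# Reproduce the rows of the table.
for n in (6, 7, 8, 9):
    print(f"{n} bytes = {bytes_to_bits(n)} bits")
```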

Does 8 bytes equal 1 bit?

No, it is the other way around. On almost all modern computers, one byte is equal to 8 bits, so 8 bytes equal 64 bits. Larger amounts of memory are expressed in kilobytes, megabytes, and gigabytes.

What is 8 bits of data?

On almost all modern computers, eight bits of data is one byte: a pattern of eight binary digits that can take 2^8 = 256 distinct values, for example the unsigned integers 0 to 255.

What does 8-bit mean in a microcontroller?

The term “8-bit” generally refers to the bit-width of the CPU. Thus an 8-bit microcontroller is one that contains an 8-bit CPU. This means that internal operations are performed on 8-bit numbers, that variables are stored in 8-bit units, and that external I/O (inputs/outputs) is typically accessed via 8-bit data buses.
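To make "internal operations are done on 8-bit numbers" more concrete, here is a small simulation of an 8-bit addition that wraps around at 256 and reports a carry. This is only a sketch in Python, not how any particular microcontroller is programmed; `add8` and `MASK_8BIT` are hypothetical names.

```python
MASK_8BIT = 0xFF  # the largest value that fits in 8 bits (255)


def add8(a: int, b: int) -> tuple[int, bool]:
    """Add two values the way an 8-bit register would.

    Returns the 8-bit result and a carry flag that is True when the
    true sum did not fit in 8 bits.
    """
    total = (a & MASK_8BIT) + (b & MASK_8BIT)
    return total & MASK_8BIT, total > MASK_8BIT


print(add8(1, 2))      # small values fit, no carry
print(add8(200, 100))  # the true sum 300 does not fit in 8 bits
```

Larger numbers are handled on such a CPU by chaining several 8-bit operations and passing the carry along, which is why the carry flag is returned alongside the result.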

What is an 8-bit system?

“8-bit” describes hardware and software from an era when computers could store and process a maximum of 8 bits per data block. This limitation was mainly due to the processor technology of the time, to which software had to conform.

Can a byte not be 8 bits?

A byte is usually defined as the smallest individually addressable unit of memory space. It can be any size. There have been architectures with byte sizes anywhere between 6 and 9 bits, maybe even bigger.

How do you represent 8 in a byte?

In binary, 8 is written 1000, so in an 8-bit byte it is stored as 00001000. The largest number you can represent with 8 bits is 11111111, or 255 in decimal notation. Since 00000000 is the smallest, you can represent 256 things with a byte. (Remember, a byte is just a pattern.)
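These bit patterns can be checked with a few lines of Python. The function name `to_byte_string` is invented for this sketch; it assumes an unsigned 8-bit byte.

```python
def to_byte_string(n: int) -> str:
    """Return n as an 8-digit binary string, assuming an unsigned 8-bit byte."""
    if not 0 <= n <= 255:
        raise ValueError("value does not fit in one unsigned 8-bit byte")
    return format(n, "08b")


eight = to_byte_string(8)          # the bit pattern for the number 8
largest = int("11111111", 2)       # the largest 8-bit pattern as a decimal number
pattern_count = largest + 1        # patterns run from 00000000 up to 11111111

print(eight, largest, pattern_count)
```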

Why is the number of bits in a byte 8?

- The byte was originally the smallest number of bits that could hold a single character. Standard ASCII defines 7-bit codes, but each one is conventionally stored in an 8-bit byte, and ASCII is still in use, so 8 bits per character is still relevant.

What is the smallest number of bits in a character?

- Standard ASCII needs 7 bits per character, and some older character codes used only 5 or 6. In practice, each ASCII character is stored in one 8-bit byte. This sentence, for instance, is 41 bytes. That's easily countable and practical for our purposes.
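The 41-byte claim can be verified by encoding the sentence as ASCII and counting the resulting bytes. This is a quick check in Python, assuming the sentence is typed with plain ASCII punctuation:

```python
sentence = "This sentence, for instance, is 41 bytes."

# Encoding as ASCII produces exactly one byte per character.
encoded = sentence.encode("ascii")
byte_count = len(encoded)

# Every ASCII code is below 128, so it fits in 7 bits with one bit of the byte unused.
highest_code = max(encoded)

print(byte_count, highest_code)
```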

What was the first computer with an 8-bit character?

- The first computer with an “8-bit character” was the IBM 7030. The customer that insisted was the CIA. Prior to the IBM 7030, characters were commonly 6 bits. The 7030 had 64-bit words (plus 8 bits of error-correcting code).

What would happen if we had only 4 bits per character?

- If we had only 4 bits, there would be only 16 (2^4) possible characters, unless we used two 4-bit bytes to represent a single character, which is less efficient computationally.
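The arithmetic behind this answer is that n bits give 2^n distinct patterns. A short sketch (the helper names `symbol_count` and `bits_needed` are made up for illustration):

```python
def symbol_count(bits: int) -> int:
    """Number of distinct patterns that fit in the given number of bits."""
    return 2 ** bits


def bits_needed(symbols: int) -> int:
    """Smallest number of bits that can give each of `symbols` items its own pattern."""
    return max(1, (symbols - 1).bit_length())


for width in (4, 6, 7, 8):
    print(f"{width} bits -> {symbol_count(width)} possible characters")

# The 26 letters of the English alphabet alone already need more than 4 bits.
print(bits_needed(26))
```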